Kinetic Art Display

For the 2024 Formlabs Summer Hackathon, my team created a kinetic art display made of 40 linear stepper motors embedded in the shells of two Form 2s. As the sole integration and software engineer, I installed firmware on the five MKS Monster 8 v2 boards that drive all 40 motors and wrote the Raspberry Pi software that controls the boards over serial.

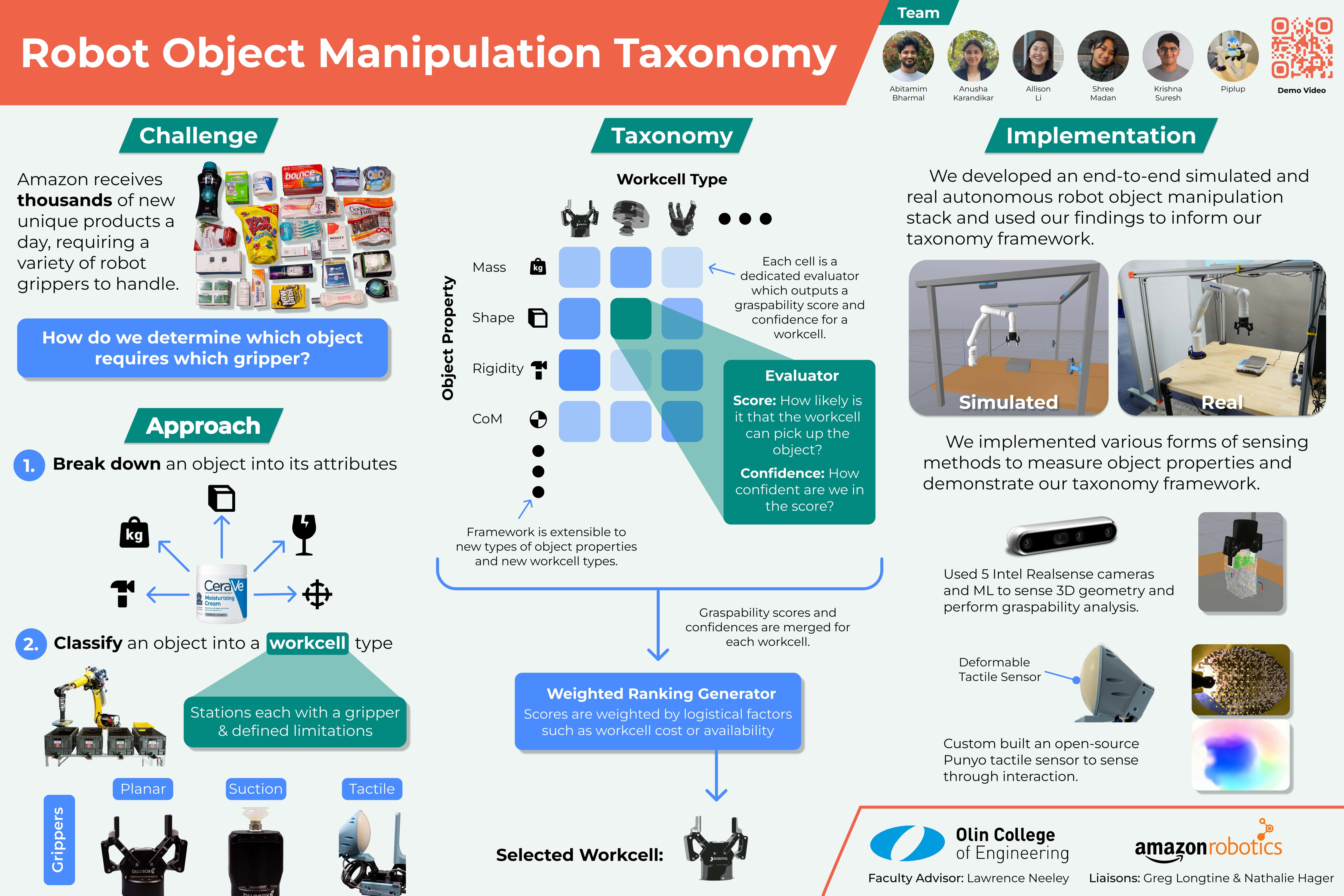

Robot Object Manipulation Taxonomy | Amazon Robotics-sponsored SCOPE Project

For my Senior Capstone Program in Engineering (SCOPE) project, my team partnered with Amazon Robotics to develop an autonomous method for choosing the most effective end effector (the gripper at the end of a robot arm) for any given object.

Autonomous Trail Robot

Retrofit an existing remote-controlled off-road vehicle, the CyberKAT, with a hardware system running ROS2, converting it into an autonomous robot capable of navigating trails. A final project for A Computational Introduction to Robotics at Olin College of Engineering.

Visual Odometry Using AprilTags

A package that detects and visualizes AprilTags and tracks the relative movement of a Neato robot from the changing position of an AprilTag relative to the camera. A project for A Computational Introduction to Robotics at Olin College of Engineering.
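The core idea behind tag-based odometry can be sketched with planar rigid transforms. This is a minimal 2D sketch, assuming the tag is stationary and that a detector already returns the tag's planar pose (x, y, theta) in the camera frame; `Pose2D`, `compose`, `invert`, and `camera_motion` are hypothetical names, not the package's actual API, and a real AprilTag detector reports full 3D poses.

```python
import math
from typing import NamedTuple


class Pose2D(NamedTuple):
    """Planar rigid transform: rotate by theta (radians), then translate by (x, y)."""
    x: float
    y: float
    theta: float


def wrap(angle: float) -> float:
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Transform b expressed in a's frame (the product a * b)."""
    c, s = math.cos(a.theta), math.sin(a.theta)
    return Pose2D(a.x + c * b.x - s * b.y, a.y + s * b.x + c * b.y, wrap(a.theta + b.theta))


def invert(a: Pose2D) -> Pose2D:
    """Inverse transform: rotation R^T and translation -R^T t."""
    c, s = math.cos(a.theta), math.sin(a.theta)
    return Pose2D(-(c * a.x + s * a.y), -(-s * a.x + c * a.y), wrap(-a.theta))


def camera_motion(tag_before: Pose2D, tag_after: Pose2D) -> Pose2D:
    """Camera (robot) motion between two frames, expressed in the earlier camera frame.

    Both arguments are the tag's pose in the camera frame. Because the tag is
    assumed stationary, T_c1_c2 = T_c1_tag * inverse(T_c2_tag).
    """
    return compose(tag_before, invert(tag_after))
```

For example, if a tag 2 m straight ahead appears 1 m ahead in the next frame, `camera_motion(Pose2D(2, 0, 0), Pose2D(1, 0, 0))` gives a forward motion of 1 m with no rotation. Accumulating these relative motions with `compose` yields the robot's trajectory.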

Robot Localization

Localize a robot in a known map, given a starting position and 2D LiDAR scan data, using a particle filter. A project for A Computational Introduction to Robotics at Olin College of Engineering.
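A particle filter repeats three steps: predict each particle's pose from odometry, weight it by how well the sensor reading matches the map from that pose, and resample. The sketch below shows that loop in a deliberately simplified setting, assuming a 1D corridor with a single wall as the "known map" and one noisy range reading in place of a full 2D LiDAR scan; the constants, noise levels, and function names are all hypothetical, and this is not the project's implementation.

```python
import math
import random

# Hypothetical 1D "known map": a corridor ending in a wall at x = 10 m.
WALL_X = 10.0
SENSOR_SIGMA = 0.2   # assumed range-sensor noise (m)
MOTION_SIGMA = 0.05  # assumed odometry noise per step (m)


def expected_range(x: float) -> float:
    """Reading a perfect range sensor at position x would report (the map lookup)."""
    return WALL_X - x


def particle_filter_step(particles, control, measurement, rng):
    """One predict / weight / resample cycle; returns the new particle set."""
    # Predict: move every particle by the odometry control plus noise.
    moved = [p + control + rng.gauss(0.0, MOTION_SIGMA) for p in particles]
    # Weight: Gaussian likelihood of the actual reading given each particle's pose.
    weights = [
        math.exp(-0.5 * ((measurement - expected_range(p)) / SENSOR_SIGMA) ** 2)
        for p in moved
    ]
    if sum(weights) == 0.0:
        # Every particle is implausible; fall back to uniform weights.
        weights = [1.0] * len(moved)
    # Resample (multinomial) with probability proportional to weight.
    return rng.choices(moved, weights=weights, k=len(moved))


def localize(start_guess, true_start, control, steps, n_particles=500, seed=0):
    """Simulate the robot driving toward the wall; return (estimate, true position)."""
    rng = random.Random(seed)
    # The known starting position seeds the particle cloud.
    particles = [rng.gauss(start_guess, 0.5) for _ in range(n_particles)]
    true_x = true_start
    for _ in range(steps):
        true_x += control
        reading = expected_range(true_x) + rng.gauss(0.0, SENSOR_SIGMA)
        particles = particle_filter_step(particles, control, reading, rng)
    return sum(particles) / len(particles), true_x
```

Calling `localize(start_guess=2.0, true_start=2.3, control=0.5, steps=10)` runs ten cycles; the particle mean should track the true position. A 2D version keeps (x, y, heading) per particle and compares many ray-cast ranges against the map instead of one.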

Invisible Map & Map Creator

iOS apps designed to help blind and visually impaired (BVI) people navigate indoors. I worked on this project through the Olin College Crowdsourcing and Machine Learning (OCCaM) lab, an assistive technology lab run by Paul Ruvolo.

Point of No Return

A 2D infinite-world survival thriller game programmed with Python's pygame library. A final project for Software Design at Olin College of Engineering. The project primarily developed my skills in designing code architecture, specifically the Model-View-Controller (MVC) pattern.

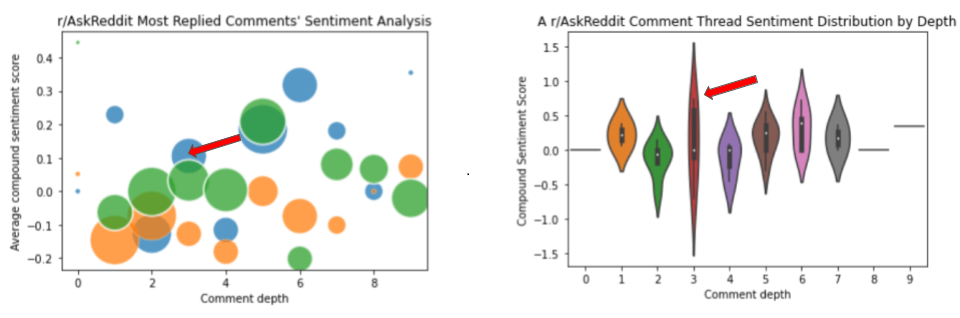
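The Model-View-Controller split can be illustrated with a small skeleton. This is a hypothetical sketch, not the game's code: pygame is left out so the structure stands on its own, key presses are plain strings, and `Model`, `Controller`, `TextView`, and `run` are illustrative names. The model holds only state, the controller turns input into model updates, and the view only reads the model to draw it.

```python
from dataclasses import dataclass


@dataclass
class Model:
    """Game state only: no drawing or input handling."""
    player_x: int = 0
    player_y: int = 0
    health: int = 100

    def move_player(self, dx: int, dy: int) -> None:
        # Coordinates are unbounded, matching an infinite world.
        self.player_x += dx
        self.player_y += dy


class Controller:
    """Translates raw input (here, key names) into model updates."""
    KEY_TO_MOVE = {"w": (0, -1), "s": (0, 1), "a": (-1, 0), "d": (1, 0)}

    def __init__(self, model: Model):
        self.model = model

    def handle_key(self, key: str) -> None:
        if key in self.KEY_TO_MOVE:
            self.model.move_player(*self.KEY_TO_MOVE[key])


class TextView:
    """Renders the model; a pygame view would draw sprites instead of returning text."""

    def __init__(self, model: Model):
        self.model = model

    def render(self) -> str:
        m = self.model
        return f"player at ({m.player_x}, {m.player_y}), health {m.health}"


def run(keys):
    """Drive the loop with a scripted key sequence; returns one rendered frame per key."""
    model = Model()
    controller = Controller(model)
    view = TextView(model)
    frames = []
    for key in keys:  # each iteration is one frame: input -> update -> draw
        controller.handle_key(key)
        frames.append(view.render())
    return frames
```

Because the view never mutates state and the model never touches input or graphics, swapping the text view for a pygame renderer leaves the other two classes unchanged.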

Reddit Sentiment Analysis

A data analysis project examining whether the calculated sentiment of an initial comment on a Reddit post is connected to the sentiment of its replies. A midterm project for Software Design at Olin College of Engineering. The goal was to practice using APIs to obtain and organize data, and using Python and Jupyter Notebooks to manipulate, visualize, and analyze that data.

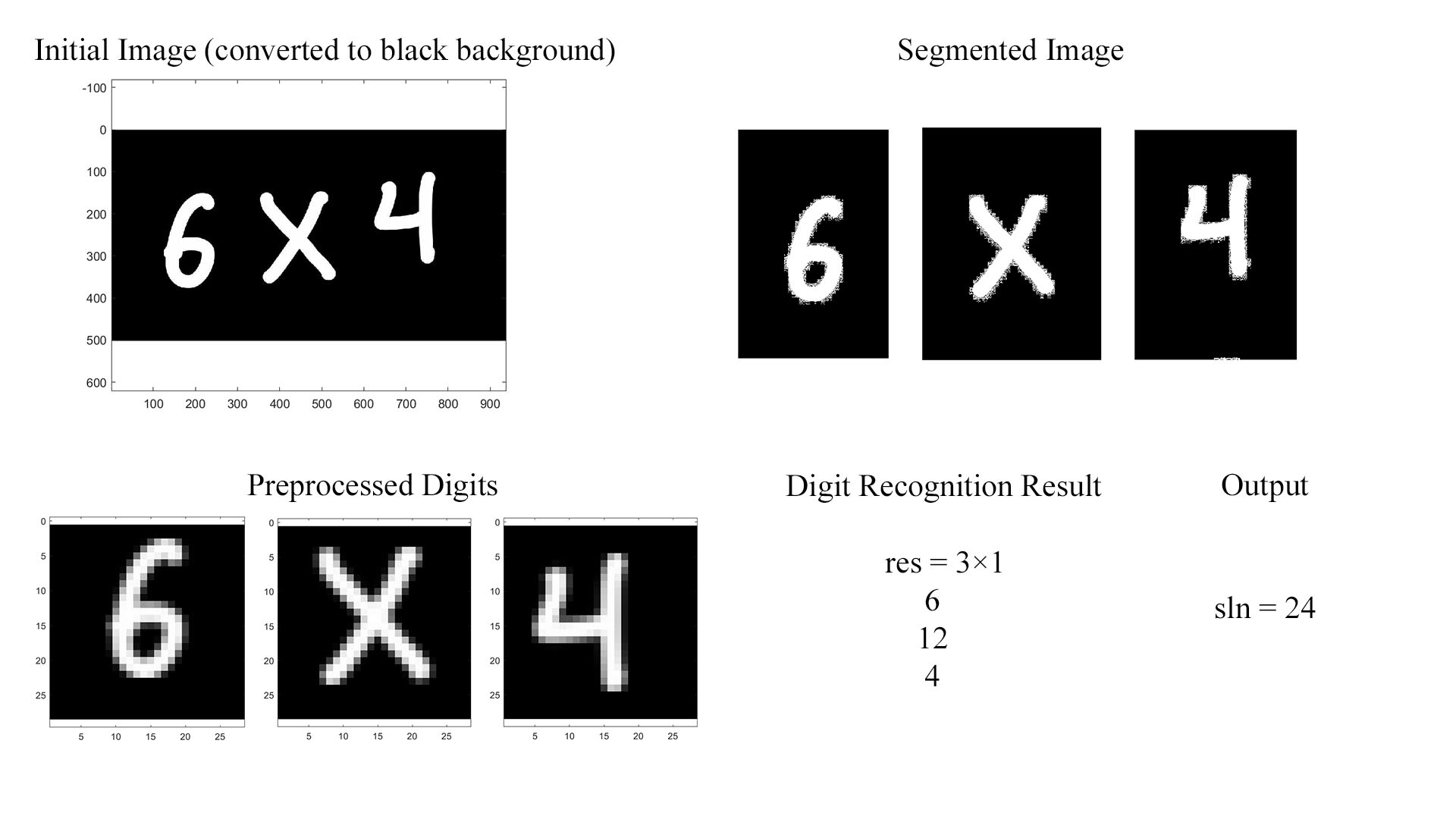

Handwritten Digit Recognition Algorithm

An algorithm written in MATLAB that identifies handwritten digits in an image and performs rudimentary arithmetic. Our algorithm is a slight modification of the Eigenfaces facial recognition algorithm. A final project for Quantitative Engineering Analysis 1 at Olin College of Engineering.

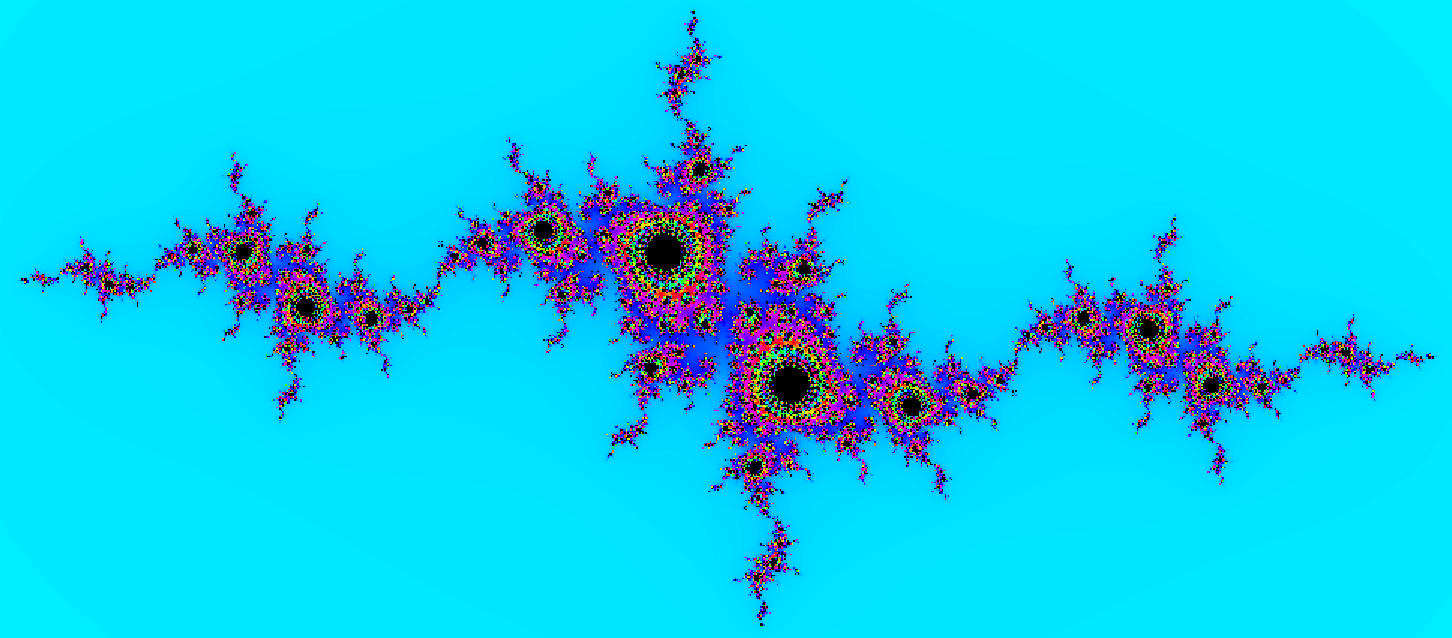
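The standard Eigenfaces approach, applied to digits, mean-centers the flattened training images, takes their top principal components as a basis, and classifies a new image by its nearest training image in that reduced space. The sketch below shows that generic technique in Python with NumPy rather than the project's MATLAB code; it does not reproduce the team's specific modification or the arithmetic step, and the function names and `k` are hypothetical choices.

```python
import numpy as np


def train_eigendigits(train_images, k):
    """Fit an Eigenfaces-style basis.

    train_images: array of shape (n_samples, height * width), one flattened image per row.
    Returns (mean_image, basis) where basis has shape (k, height * width).
    """
    mean_image = train_images.mean(axis=0)
    centered = train_images - mean_image
    # Rows of vt are principal directions ("eigendigits"), ordered by variance explained.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_image, vt[:k]


def project(images, mean_image, basis):
    """Coordinates of each image in the k-dimensional eigendigit space."""
    return (images - mean_image) @ basis.T


def classify(test_images, train_images, train_labels, k=20):
    """Label each test image with the label of its nearest training image in eigenspace.

    train_labels must be a NumPy array aligned with the rows of train_images.
    """
    mean_image, basis = train_eigendigits(train_images, k)
    train_w = project(train_images, mean_image, basis)
    test_w = project(test_images, mean_image, basis)
    # Pairwise Euclidean distances, shape (n_test, n_train).
    dists = np.linalg.norm(test_w[:, None, :] - train_w[None, :, :], axis=2)
    return train_labels[np.argmin(dists, axis=1)]
```

Projecting onto a few components before comparing discards pixel-level noise and makes the nearest-neighbor search cheaper than comparing raw images.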

Julia Set Visualization

An interactive Java program to visualize the Julia set. It includes menu objects for manipulating different parameters of the Julia set equation, as well as for panning and zooming across the visualization. The project was a way to learn the basics of fractals as a mathematical concept while getting more familiar with Java GUI components. A project for Data Structures at South Brunswick High School.

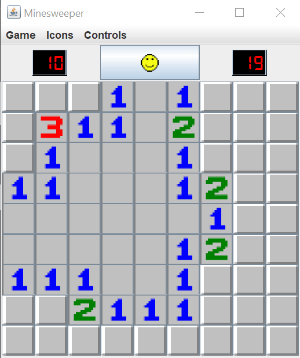
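A common way to draw a Julia set is the escape-time algorithm: iterate z → z² + c from each pixel's complex coordinate and color the pixel by how quickly |z| exceeds 2. This sketch is in Python rather than the original Java, renders ASCII instead of a GUI, and uses hypothetical `center` and `zoom` arguments as stand-ins for the program's panning and zooming controls.

```python
def escape_time(z: complex, c: complex, max_iter: int = 100) -> int:
    """Iterations of z -> z**2 + c before |z| exceeds 2 (max_iter if it never does)."""
    for n in range(max_iter):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iter


def render(c: complex, width: int = 60, height: int = 24,
           center: complex = 0j, zoom: float = 1.0, max_iter: int = 50) -> str:
    """ASCII view of the filled Julia set for parameter c.

    Points still bounded after max_iter iterations are drawn as '#'.
    `center` pans the view and `zoom` scales it: the window spans 3/zoom
    units horizontally around `center`.
    """
    span_x = 3.0 / zoom
    # Terminal cells are roughly twice as tall as they are wide.
    span_y = 2.0 * span_x * height / width
    rows = []
    for j in range(height):
        y = center.imag + span_y * (0.5 - j / (height - 1))
        row = []
        for i in range(width):
            x = center.real + span_x * (i / (width - 1) - 0.5)
            inside = escape_time(complex(x, y), c, max_iter) == max_iter
            row.append("#" if inside else " ")
        rows.append("".join(row))
    return "\n".join(rows)
```

Running `print(render(-0.8 + 0.156j))` draws one example set; changing `c` reshapes the fractal, while changing `center` and `zoom` only moves the window over the complex plane.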

Minesweeper

A Java version of the classic Windows game Minesweeper. It includes the three standard difficulty modes, a timer, and a mine counter, as well as options to change the game's icons. The main goal of this project was to become more familiar with basic game design and to develop skills with Java GUI elements. A project for Data Structures at South Brunswick High School.

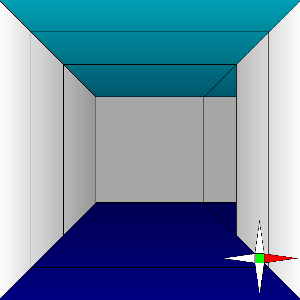

3D Maze Game

A 3D maze game in which the player navigates through multiple levels, collecting items on the way to the end. The main goal of this project was to familiarize myself with Java GUI and to challenge myself by mimicking 3D perspective with 2D graphics. A project for Data Structures at South Brunswick High School.
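One standard way to mimic perspective in 2D is a pinhole projection: divide a point's horizontal and vertical offsets by its depth so that distant geometry converges toward the screen center. The source does not say which technique the game used; this is a hypothetical Python sketch (not the original Java), and `project`, `focal`, and `near` are illustrative names.

```python
from typing import Optional, Tuple

Point3 = Tuple[float, float, float]


def project(point: Point3, focal: float, screen_w: int, screen_h: int,
            near: float = 0.1) -> Optional[Tuple[float, float]]:
    """Pinhole perspective projection of a camera-space point onto the screen.

    The camera looks down +z with x to the right and y up; the screen origin
    is the top-left corner. Returns None for points behind (or too close to)
    the camera.
    """
    x, y, z = point
    if z < near:
        return None
    sx = screen_w / 2 + focal * x / z
    sy = screen_h / 2 - focal * y / z  # screen y grows downward
    return sx, sy
```

Projecting the bottom-left edge of a corridor wall at increasing depths, for example `[project((-1, -1, z), 100, 640, 480) for z in (1, 2, 4, 8)]`, gives x-coordinates of 220, 270, 295, and 307.5, which creep toward the center column at 320. Drawing lines between such projected corners produces the converging walls of a first-person maze.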